Fault tolerance and high availability in SolrCloud

Whenever SolrCloud is restarted, leader election takes place again. If a node that previously hosted a replica of a shard comes up before the node that hosted the leader, the former replica becomes the leader and the former leader rejoins as a replica. So each time we restart the Tomcat and ZooKeeper servers to bring up SolrCloud, the leader and replica roles may switch.

Let us check the availability of the cluster. We will bring down a leader node and a replica node to check whether the cluster is able to serve all the documents that we have indexed. First check the number of documents in mycollection:

http://solr5:8080/solr/mycollection/select/?q=*:*

We can see that there are nine documents in the collection. Similarly, run the following query on catcollection:

http://solr5:8080/solr/catcollection/select/?q=*:*

The catcollection contains 11 documents.

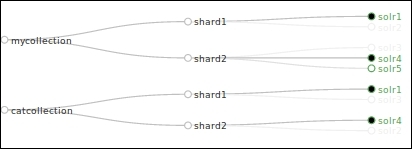
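The same count check can be scripted instead of being read off the browser. The following is a minimal sketch, assuming the host `solr5:8080` and the collection names used above. `rows=0` and `wt=json` are standard Solr query parameters; the helper names `count_url`, `num_found` and `fetch_count` are hypothetical and chosen only for illustration.

```python
import json
import urllib.parse
import urllib.request

BASE = "http://solr5:8080/solr"  # host and port taken from the text above


def count_url(collection, base=BASE):
    """Build a match-all query URL that asks only for the hit count (rows=0)."""
    params = urllib.parse.urlencode({"q": "*:*", "rows": 0, "wt": "json"})
    return f"{base}/{collection}/select?{params}"


def num_found(response_text):
    """Extract the total number of matching documents from a JSON select response."""
    return json.loads(response_text)["response"]["numFound"]


def fetch_count(collection, base=BASE):
    """Query the live cluster; this needs network access to the Solr node."""
    with urllib.request.urlopen(count_url(collection, base), timeout=10) as resp:
        return num_found(resp.read().decode("utf-8"))
```

Calling `fetch_count("mycollection")` and `fetch_count("catcollection")` before and after taking nodes down gives a quick way to compare the counts reported in this section.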

Now let us bring down Tomcat on solr2 and solr3, or simply turn off the machines. Check the SolrCloud graph:

We can see that the nodes solr2 and solr3 are now in the Gone state. Here, solr3 was the leader for shard2 in mycollection; now that it is offline, solr4 has been promoted to leader. For shard1, the unavailable node solr2 was only the replica, so solr1 remains the leader.
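The leader assignments shown in the graph can also be read programmatically. The sketch below assumes a Solr version that supports the Collections API `CLUSTERSTATUS` action (Solr 4.8 or later; the version used in this chapter is not stated, so this is an assumption). The function names `shard_leaders` and `fetch_status` are hypothetical. A replica is counted as a leader only if its node also appears in `live_nodes`, because the state recorded for a node that went down abruptly can still read `active`.

```python
import json
import urllib.request

# Assumes Solr 4.8+ where the CLUSTERSTATUS action exists.
STATUS_URL = ("http://solr5:8080/solr/admin/collections"
              "?action=CLUSTERSTATUS&wt=json")


def shard_leaders(status, collection):
    """Map each shard name to the node_name of its live, active leader (or None)."""
    cluster = status["cluster"]
    live = set(cluster.get("live_nodes", []))
    shards = cluster["collections"][collection]["shards"]
    leaders = {}
    for shard, info in shards.items():
        leaders[shard] = None
        for replica in info["replicas"].values():
            is_leader = str(replica.get("leader", "")).lower() == "true"
            if (is_leader and replica.get("state") == "active"
                    and replica.get("node_name") in live):
                leaders[shard] = replica["node_name"]
    return leaders


def fetch_status(url=STATUS_URL):
    """Fetch and parse the cluster status; needs network access to the Solr node."""
    with urllib.request.urlopen(url, timeout=10) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

With solr2 and solr3 down, `shard_leaders(fetch_status(), "mycollection")` would be expected to report the solr1 node for shard1 and the solr4 node for shard2, matching the graph described above.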

Let us rerun the earlier queries on both collections. We can see that the document count in each collection remains the same.

We can even add documents to SolrCloud during this time. Add the ipod_other.xml file from the example/exampledocs folder inside the Solr installation to mycollection on SolrCloud. Execute the following command:

$ java -Durl=http://solr5:8080/solr/mycollection/update -jar post.jar ipod_other.xml
SimplePostTool version 1.5
Posting files to base url http://solr5:8080/solr/mycollection/update using content-type application/xml..
POSTing file ipod_other.xml
1 files indexed.
COMMITting Solr index changes to http://solr5:8080/solr/mycollection/update..
Time spent: 0:00:07.978

Now again run the query to get the complete count from mycollection:

http://solr5:8080/solr/mycollection/select/?q=*:*

The mycollection collection now contains 11 documents, up from nine, because the single file ipod_other.xml holds two documents.

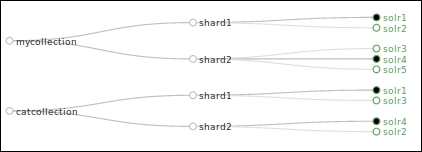

Now start Tomcat on solr2 and solr3. After a short time in recovery, both Solr instances rejoin the cloud. For mycollection, solr2 resumes its role as the replica of solr1 for shard1, and solr3 becomes the replica of solr4 for shard2.

The documents that were added to the cloud while any of the nodes were down are automatically replicated onto those nodes once they rejoin the cloud. Therefore, as long as at least one node for each shard of a collection is available, the collection remains available and continues to support indexing and searching of documents.
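The availability rule stated above can be written as a small check. This is a sketch under stated assumptions: the input dictionary follows the shape of a Collections API `CLUSTERSTATUS` response (available from Solr 4.8, which may differ from the version used here), the sample data is hand-written to mirror the outage in this section rather than captured from a real cluster, and the node names and the functions `available_shards` and `collection_available` are hypothetical.

```python
# Hand-written sample mirroring the outage above; not real cluster output.
SAMPLE_STATUS = {
    "cluster": {
        "live_nodes": ["solr1:8080_solr", "solr4:8080_solr"],
        "collections": {
            "mycollection": {
                "shards": {
                    "shard1": {"replicas": {
                        "core_node1": {"state": "active", "node_name": "solr1:8080_solr"},
                        "core_node2": {"state": "active", "node_name": "solr2:8080_solr"},
                    }},
                    "shard2": {"replicas": {
                        "core_node3": {"state": "down", "node_name": "solr3:8080_solr"},
                        "core_node4": {"state": "active", "node_name": "solr4:8080_solr"},
                    }},
                }
            }
        },
    }
}


def available_shards(status, collection):
    """Return {shard: bool}; True when at least one replica is active on a live node."""
    cluster = status["cluster"]
    live = set(cluster.get("live_nodes", []))
    result = {}
    for shard, info in cluster["collections"][collection]["shards"].items():
        result[shard] = any(
            r.get("state") == "active" and r.get("node_name") in live
            for r in info["replicas"].values()
        )
    return result


def collection_available(status, collection):
    """A collection can serve every document only if each shard is available."""
    return all(available_shards(status, collection).values())
```

For the sample above, every shard still has one live, active replica, so `collection_available(SAMPLE_STATUS, "mycollection")` evaluates to `True`. Removing solr4 from `live_nodes` as well would leave shard2 without any replica and the check would fail.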