Advanced sharding with SolrCloud

Let's explore some of the advanced concepts of sharding, starting with shard splitting.

Shard splitting

Suppose we have created a two-shard cluster sized for the system's current number of queries per second. If the number of queries per second later grows to two or three times the current value, we will need to add more shards. One way is to create a separate cloud with, say, four shards and re-index all the documents. This is feasible if the cluster is small. If we are dealing with a 50-shard cluster holding more than a billion documents, re-indexing the complete set of documents may be expensive. For such scenarios, SolrCloud has the concept of shard splitting.

In shard splitting, a shard is divided into two new shards on the same machine. All three shards, the old one and the two new ones, remain. We can check the sanity of the new shards and then delete the old one. Let us walk through this in practice.

Before starting, let's add a few more documents to mycollection. Add the books.csv file from the example/exampledocs folder to mycollection:

java -Dtype=text/csv -Durl=http://solr1:8080/solr/mycollection/update -jar post.jar books.csv

To check the number of documents in mycollection, execute the following query:

http://solr1:8080/solr/mycollection/select/?q=*:*

We can see that there are currently 35 documents in mycollection. Let us check the count of documents in each shard. Execute the following query to find the documents in shard1 of mycollection:

http://solr1:8080/solr/mycollection/select/?q=*:*&shards=shard1

There are 17 documents in shard1. Now execute the following query to find the number of documents in shard2 of mycollection:

http://solr1:8080/solr/mycollection/select/?q=*:*&shards=shard2

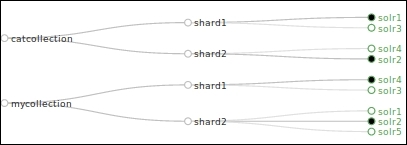

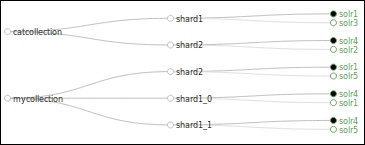
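The per-shard counts can also be collected with a short script instead of reading them off the browser. The following is a minimal sketch, assuming the solr1 base URL used in this section; the `rows=0` and `wt=json` parameters are additions that are not part of the queries above, and the helper names `count_url`, `num_found`, and `fetch_count` are hypothetical.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

# Assumed base URL, taken from the queries in this section.
SOLR_BASE = "http://solr1:8080/solr"


def count_url(collection, shard=None):
    """Build a select URL that returns only the hit count (rows=0)."""
    params = {"q": "*:*", "rows": 0, "wt": "json"}
    if shard is not None:
        # Restrict the query to a single logical shard.
        params["shards"] = shard
    # Keep '*' and ':' unescaped so the URL reads like the ones in the text.
    return f"{SOLR_BASE}/{collection}/select?{urlencode(params, safe='*:')}"


def num_found(response):
    """Extract the hit count from a parsed Solr JSON response."""
    return response["response"]["numFound"]


def fetch_count(collection, shard=None):
    """Run the query against a live cluster (needs a reachable Solr node)."""
    with urlopen(count_url(collection, shard)) as resp:
        return num_found(json.load(resp))
```

On the cluster described here, summing `fetch_count("mycollection", s)` over shard1 and shard2 should match the count for the whole collection.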

We can see that there are 18 documents in shard2. Let us also look at how our SolrCloud graph looks. We can see that shard1 is on solr3 and solr4 and shard2 is on solr1, solr2, and solr5:

Now let us split shard1 into two parts. This is done by the SPLITSHARD action on the collections API via the Solr admin interface. Execute the command by calling the following URL:

http://solr1:8080/solr/admin/collections?action=SPLITSHARD&collection=mycollection&shard=shard1

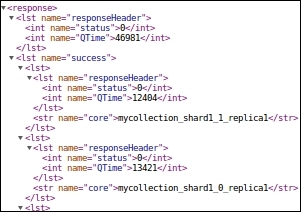

The output of the command is shown in the browser. We can see that shard1 is split into two shards, shard1_0 and shard1_1:

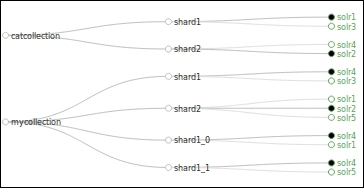
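The same call can be assembled in code, which helps when several shards need splitting. This is a sketch under the assumption that the collections API is reachable at the solr1 URL used above; `collections_url` and `sub_shard_names` are hypothetical helpers, and the `_0`/`_1` naming simply mirrors the output seen for shard1.

```python
from urllib.parse import urlencode

# Assumed base URL, taken from the commands in this section.
SOLR_BASE = "http://solr1:8080/solr"


def collections_url(action, **params):
    """Build a collections API URL for the given action and parameters."""
    query = {"action": action, **params}
    return f"{SOLR_BASE}/admin/collections?{urlencode(query)}"


def sub_shard_names(shard):
    """Names of the two sub-shards produced by a plain two-way split."""
    return [f"{shard}_0", f"{shard}_1"]


split_url = collections_url("SPLITSHARD", collection="mycollection", shard="shard1")
print(split_url)
print(sub_shard_names("shard1"))
```

The URL printed by the sketch is the same one called from the browser above.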

While the query for shard splitting is being executed, the shard does not go offline. In fact, there is no interruption of service. Let us also look at the SolrCloud graph that would contain information on the split shards.

We can see that shard1 has been split into shard1_0 and shard1_1. Even the shards that have been split have their leaders and replicas in place. Shard1_0 has solr4 as the leader and solr1 as the replica. Similarly, shard1_1 has solr4 as the leader and solr5 as the replica. To check the number of documents in the split shards, execute the following queries:

http://solr1:8080/solr/mycollection/select/?q=*:*&shards=shard1_0

Shard1_0 contains eight documents.

http://solr1:8080/solr/mycollection/select/?q=*:*&shards=shard1_1

Similarly, shard1_1 contains nine documents. Together, the two sub-shards hold the 17 documents that shard1 had earlier. In addition to splitting shard1 into two sub-shards, SolrCloud marks the parent shard, shard1, as inactive. This information is available in the ZooKeeper servers. Connect to any of the ZooKeeper servers and get the clusterstate.json file to check the status of the shards for mycollection:

    "mycollection":{
      "shards":{
        "shard1":{
          "range":"80000000-ffffffff",
          "state":"inactive",
          "replicas":{
            "core_node1":{
              "state":"active",
              "base_url":"http://solr4:8080/solr",
              "core":"mycollection_shard1_replica1",
              "node_name":"solr4:8080_solr",
              "leader":"true"},
            "core_node4":{
              "state":"active",
              "base_url":"http://solr3:8080/solr",
              "core":"mycollection_shard1_replica2",
              "node_name":"solr3:8080_solr"}}},

Now, shard1_0 and shard1_1 are marked as active:

        "shard1_0":{
          "range":"80000000-bfffffff",
          "state":"active",
          "replicas":{
            "core_node6":{
              "state":"active",
              "base_url":"http://solr4:8080/solr",
              "core":"mycollection_shard1_0_replica1",
              "node_name":"solr4:8080_solr",
              "leader":"true"},
            "core_node8":{
              "state":"active",
              "base_url":"http://solr1:8080/solr",
              "core":"mycollection_shard1_0_replica2",
              "node_name":"solr1:8080_solr"}}},
        "shard1_1":{
          "range":"c0000000-ffffffff",
          "state":"active",
          "replicas":{
            "core_node7":{
              "state":"active",
              "base_url":"http://solr4:8080/solr",
              "core":"mycollection_shard1_1_replica1",
              "node_name":"solr4:8080_solr",
              "leader":"true"},
            "core_node9":{
              "state":"active",
              "base_url":"http://solr5:8080/solr",
              "core":"mycollection_shard1_1_replica2",
              "node_name":"solr5:8080_solr"}}}},

Deleting a shard

Only an inactive shard can be deleted. In the previous section, we found that since shard1 was split into shard1_0 and shard1_1, shard1 was marked as inactive. We can delete shard1 by executing the DELETESHARD action on the collections API:

http://solr1:8080/solr/admin/collections?action=DELETESHARD&collection=mycollection&shard=shard1

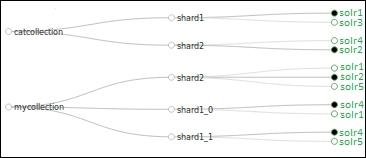

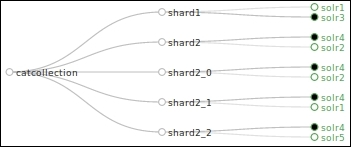
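Before issuing DELETESHARD, it can be useful to confirm from the cluster state that the parent is inactive and that the sub-shards are active and together cover the parent's hash range. The sketch below assumes the `shards` section of clusterstate.json has already been parsed into a dictionary; the function names are hypothetical, and the ranges are interpreted as signed 32-bit hexadecimal bounds.

```python
def parse_range(text):
    """Convert a range such as '80000000-ffffffff' to signed 32-bit bounds."""
    def signed(hex_text):
        value = int(hex_text, 16)
        # Values with the top bit set represent negative hash values.
        return value - (1 << 32) if value >= (1 << 31) else value

    low, high = text.split("-")
    return signed(low), signed(high)


def covers_parent(shards, parent, children):
    """True if the children's ranges tile the parent's range with no gaps."""
    parent_low, parent_high = parse_range(shards[parent]["range"])
    expected_low = parent_low
    for low, high in sorted(parse_range(shards[c]["range"]) for c in children):
        if low != expected_low:
            return False  # gap or overlap between neighbouring ranges
        expected_low = high + 1
    return expected_low == parent_high + 1


def deletable(shards, parent, children):
    """Parent must be inactive; children must be active and cover its range."""
    return (
        shards[parent]["state"] == "inactive"
        and all(shards[c]["state"] == "active" for c in children)
        and covers_parent(shards, parent, children)
    )
```

For the state shown earlier, `deletable(shards, "shard1", ["shard1_0", "shard1_1"])` evaluates to true, because bfffffff and c0000000 are adjacent and the two ranges span 80000000 to ffffffff.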

The following is a representation of the SolrCloud graph after the deletion of shard1:

Moving the existing shard to a new node

In order to move a shard to a new node, we need to add the node as a replica. Once the replication on the node is over and the node becomes active, we can simply shut down the old node and remove it from the cluster.

In the current cluster, we can see that shard2 has three nodes—solr1, solr2, and solr5. We added solr5 some time back as a replica for shard2. In order to remove solr2 from mycollection, all we need to do is use the DELETEREPLICA action on the collections API:

http://solr1:8080/solr/admin/collections?action=DELETEREPLICA&collection=mycollection&shard=shard2&replica=core_node3

The name of the replica is obtained from clusterstate.json in the ZooKeeper cluster:

    "mycollection":{
      "shards":{
        "shard2":{
          "range":"0-7fffffff",
          "state":"active",
          "replicas":{
            "core_node2":{
              "state":"active",
              "base_url":"http://solr1:8080/solr",
              "core":"mycollection_shard2_replica1",
              "node_name":"solr1:8080_solr",
              "leader":"true"},
            "core_node3":{
              "state":"active",
              "base_url":"http://solr2:8080/solr",
              "core":"mycollection_shard2_replica2",
              "node_name":"solr2:8080_solr"},
            "core_node5":{
              "state":"active",
              "base_url":"http://solr5:8080/solr",
              "core":"mycollection_shard2_replica3",
              "node_name":"solr5:8080_solr"}}},

The graph now shows solr2 is removed from mycollection:

In this case, solr2, which is also a node in catcollection, is still active.
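Looking up the replica name by hand is easy to get wrong, so the lookup can be scripted. The following sketch assumes the shard2 entry from clusterstate.json has been parsed into a dictionary; `replica_on_node`, `can_remove`, and `delete_replica_url` are hypothetical helpers, and the check that another active replica remains is an added precaution rather than something the API requires.

```python
from urllib.parse import urlencode

# Assumed base URL, taken from the commands in this section.
SOLR_BASE = "http://solr1:8080/solr"


def replica_on_node(shard_state, node_name):
    """Return the replica name (for example core_node3) hosted on a node."""
    for name, replica in shard_state["replicas"].items():
        if replica["node_name"] == node_name:
            return name
    return None


def can_remove(shard_state, replica_name):
    """True if at least one other active replica would remain."""
    return any(
        name != replica_name and replica["state"] == "active"
        for name, replica in shard_state["replicas"].items()
    )


def delete_replica_url(collection, shard, replica_name):
    """Build the DELETEREPLICA call for the collections API."""
    params = {
        "action": "DELETEREPLICA",
        "collection": collection,
        "shard": shard,
        "replica": replica_name,
    }
    return f"{SOLR_BASE}/admin/collections?{urlencode(params)}"
```

With the shard2 state shown above, looking up `solr2:8080_solr` yields `core_node3`, which produces the same DELETEREPLICA URL used in this section.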

Shard splitting based on split key

Shards can also be split on the basis of a split key. A split key is used to route documents that meet certain criteria to a particular shard in SolrCloud. To split a shard by using a split key, we need to specify the split.key parameter along with the collection parameter in the SPLITSHARD action of the collections API.

We can split catcollection into more shards using category as the split.key parameter. The URL for splitting the shard will be:

http://solr1:8080/solr/admin/collections?action=SPLITSHARD&collection=catcollection&split.key=books!
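As a sketch of how the split key relates to document IDs, the snippet below builds the same URL and shows an ID carrying the `books!` prefix. It assumes catcollection routes documents by a key prefix separated from the rest of the ID by `!`; the helper names and the sample ID are hypothetical.

```python
from urllib.parse import urlencode

# Assumed base URL, taken from the commands in this section.
SOLR_BASE = "http://solr1:8080/solr"


def routed_id(route_key, doc_id):
    """Prefix a document ID with its route key, for example 'books!42'."""
    return f"{route_key}!{doc_id}"


def split_by_key_url(collection, route_key):
    """Build a SPLITSHARD call that splits on the given route key."""
    params = {
        "action": "SPLITSHARD",
        "collection": collection,
        "split.key": f"{route_key}!",
    }
    # Leave '!' unescaped so the URL matches the form used in the text.
    return f"{SOLR_BASE}/admin/collections?{urlencode(params, safe='!')}"


print(routed_id("books", "42"))
print(split_by_key_url("catcollection", "books"))
```

Note that no shard parameter is passed here: the shard to split is determined from the key.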

Once the query has been executed, we can see the success message. It says that shard2 of catcollection has been broken into three shards, as shown in the following SolrCloud graph:

The complete process of splitting a shard into two and moving it to a separate new node in SolrCloud is required in the following scenarios:

- Degradation of average query performance on a shard, or a slowdown across a number of shards. It is important to measure this regularly and keep track of the number of queries per second.

- Degradation of indexing throughput. This is a scenario wherein you were able to index 1000 documents per second earlier, but it goes down to say 800 documents per second.

- Out-of-memory errors during querying that persist even after tuning queries, caches, and garbage collection.
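The throughput scenario above can be turned into a simple check. This is only an illustrative sketch: the 15 percent threshold and the function names are assumptions, not values recommended by Solr, and real monitoring would look at trends rather than a single sample.

```python
def relative_drop(baseline, current):
    """Fraction by which a rate has fallen relative to its baseline."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (baseline - current) / baseline


def should_consider_split(baseline_docs_per_sec, current_docs_per_sec, threshold=0.15):
    """Flag a shard when indexing throughput drops by at least `threshold`."""
    return relative_drop(baseline_docs_per_sec, current_docs_per_sec) >= threshold


# The example from the text: 1000 documents per second falling to 800.
print(relative_drop(1000, 800))
print(should_consider_split(1000, 800))
```

A drop from 1000 to 800 documents per second is a 20 percent decline, which exceeds the assumed threshold.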