Merging Solr with Redis

Indexing in Solr is expensive, which makes real-time data costly to handle: every new piece of information that enters the system has to be indexed before it becomes searchable. One way around this is to split the Solr index into two parts, stable and unstable. The stable part stays inside Solr, while the unstable part is held in Redis and read by a Solr plugin. Because it lives in Redis, the unstable part can handle real-time additions and deletions made by an external script, and those changes are reflected in the search results.

Redis is an advanced key-value store that keeps its data in memory. It offers advantages over Memcached: it can persist data to disk, and it provides replication and clustering. Besides plain string values, it can store data structures such as hashes, lists, sets, and sorted sets. It also has publish/subscribe functionality built into the server.

In an advertising system, the Solr index can be used for searches based on the keyword, placement, and user profile or behavioral information. The data inside Redis can be used for filtering and sorting and contains the following information:

- Status of the ad, whether active or not

- Ad price and rank, which affect the click-through rate (CTR)

- Ad contents, such as image path, link, or text to be displayed

The data inside Redis can be small or large depending on the type of advertisement; ads that carry large images and text can make it grow considerably. However, since this data lives outside Solr, it does not affect search performance.
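The filtering and sorting role described above can be sketched in plain Java. This is only an in-memory stand-in for the data the text keeps in Redis: the `Ad` class, its field names, and the ordering rule (lower rank first, higher price on ties) are assumptions made for illustration, not part of the original design.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical in-memory stand-in for the per-ad data the text keeps in Redis. */
final class AdDataSketch {

    /** One ad's volatile attributes; the field names are illustrative assumptions. */
    static final class Ad {
        final String id;
        final boolean active;
        final double price;
        final int rank; // assumption: a lower value means the ad is shown earlier

        Ad(String id, boolean active, double price, int rank) {
            this.id = id;
            this.active = active;
            this.price = price;
            this.rank = rank;
        }
    }

    /** Keeps only active ads, then orders them by rank and, on ties, by higher price. */
    static List<String> filterAndSort(List<Ad> candidates) {
        List<Ad> active = new ArrayList<>();
        for (Ad ad : candidates) {
            if (ad.active) {
                active.add(ad);
            }
        }
        active.sort((a, b) -> {
            int byRank = Integer.compare(a.rank, b.rank);
            return byRank != 0 ? byRank : Double.compare(b.price, a.price);
        });
        List<String> ids = new ArrayList<>();
        for (Ad ad : active) {
            ids.add(ad.id);
        }
        return ids;
    }
}
```

Calling `AdDataSketch.filterAndSort(...)` with the ads matched by a search returns only the IDs of active ads, in display order, without touching the Solr index.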

Since we are creating a plugin for sorting and filtering using Redis, we need to decide where to place it. Solr provides two entry points for a plugin: ResponseWriter and SearchComponent.

- ResponseWriter: This class is used for sending responses and is unsuitable for filtering and sorting of data.

- SearchComponent: This class is easy to implement and configure and contains a QueryComponent class that can be easily modified. The QueryComponent class is the base for default searching.

We learned how to write a query parser plugin in the chapter Solr Internals and Custom Queries. We first create a RedisQParserPlugin class, which extends the QParserPlugin class, and then override the createParser function:

    public class RedisQParserPlugin extends QParserPlugin {
        @Override
        public QParser createParser(String qstr, SolrParams localParams,
                SolrParams params, SolrQueryRequest req) {
            return new QParser(qstr, localParams, params, req) {
                @Override
                public Query parse() throws SyntaxError {
                    logger.info("Redis Post-filter invoked");
                    return new RedisPostFilter();
                }
            };
        }
    }

Inside the parse function, we return a RedisPostFilter instance, which does all the hard work.

The PostFilter interface provides a mechanism for filtering documents after they have already gone through the main query and other filters.
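The mechanism can be illustrated without Solr on the classpath. The sketch below is plain Java and does not use Solr's API: `DocCollector`, `FilteringCollector`, and `postFilter` are hypothetical names that only mimic the delegation pattern, in which a wrapping collector sees each document that has already matched and forwards it only if it passes an extra check.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.IntPredicate;

/** Plain-Java illustration of the delegating-collector idea; not Solr's API. */
final class PostFilterSketch {

    /** Minimal stand-in for a result collector: it receives matching document IDs. */
    interface DocCollector {
        void collect(int docId);
    }

    /** Forwards a document to the wrapped collector only if the predicate accepts it. */
    static final class FilteringCollector implements DocCollector {
        private final DocCollector delegate;
        private final IntPredicate accept;

        FilteringCollector(DocCollector delegate, IntPredicate accept) {
            this.delegate = delegate;
            this.accept = accept;
        }

        @Override
        public void collect(int docId) {
            if (accept.test(docId)) {
                delegate.collect(docId);
            }
        }
    }

    /** Runs documents that already matched the main query through the post filter. */
    static List<Integer> postFilter(int[] matchedDocs, IntPredicate accept) {
        List<Integer> kept = new ArrayList<>();
        DocCollector chain = new FilteringCollector(docId -> kept.add(docId), accept);
        for (int doc : matchedDocs) {
            chain.collect(doc);
        }
        return kept;
    }
}
```

Because the check runs only on documents that survived the main query and the other filters, an expensive test (such as a lookup against external data) is evaluated for as few documents as possible.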

The RedisPostFilter class extends the ExtendedQueryBase class and implements the PostFilter interface. Both belong to the org.apache.solr.search package and are documented in the Solr API Javadoc.

Let us also go through the code for the PostFilter:

    public RedisPostFilter() {
        setCache(false);
        Jedis redisClient = new Jedis("localhost", 6379);

In the constructor, we disable caching and connect to the Redis server on localhost, port 6379. The post filter here simply filters the ads on the basis of their status, active (online) or inactive (offline):

        redisClient.select(1);
        onlineAds = redisClient.smembers("myList");
        this.adsList = new HashSet<BytesRef>(onlineAds.size());
        for (String ad : onlineAds) {
            this.adsList.add(new BytesRef(ad.getBytes()));
        }

After connecting to the Redis server, we select a database (Redis identifies its databases by a numeric index) and fetch the set of all online ads. The same IDs are then added to the adsList set in the object.

Next, we define a function isValid, which checks whether the ad is valid or not:

    public boolean isValid(String adId) throws IOException {
        return this.onlineAds.contains(adId);
    }

We then construct a DelegatingCollector, which runs after the main query and all filters but before any sorting or grouping collectors:

    public DelegatingCollector getFilterCollector(final IndexSearcher indexSearcher) {
        return new DelegatingCollector() {

We override two functions, setNextReader and collect. The first gets the IDs from FieldCache (the search results on the index), and the second passes the accepted documents on to the parent's result collector:

            @Override
            public void setNextReader(AtomicReaderContext context) throws IOException {
                this.docBase = context.docBase;
                this.store = FieldCache.DEFAULT.getTerms(context.reader(), "id", false);
                super.setNextReader(context);
            }

            @Override
            public void collect(int docId) throws IOException {
                String id = context.reader().document(docId).get("id");
                if (isValid(id)) {
                    super.collect(docId);
                }
            }

Inside the RedisPostFilter class, we override the getCache and getCost functions:

    @Override
    public int getCost() {
        return Math.max(super.getCost(), 100);
    }

The getCost function returns a value of at least 100.

    @Override
    public boolean getCache() {
        return false;
    }

The getCache function must return false so that caching is disabled, and getCost must return at least 100. Only then is the PostFilter interface used for filtering.
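The two conditions can be written down as a small decision rule. The following is a sketch only: `PostFilterRuleSketch` and its method names are hypothetical, and the threshold reflects the rule in Solr's documentation that a filter is applied as a post filter when caching is off, its cost is 100 or more, and the query implements PostFilter.

```java
/** Hypothetical helper that restates the post-filter conditions; not part of Solr. */
final class PostFilterRuleSketch {

    /** Minimum cost at which a non-cached filter is treated as a post filter. */
    static final int POST_FILTER_MIN_COST = 100;

    /** Returns true only when all three conditions for post filtering hold. */
    static boolean runsAsPostFilter(boolean implementsPostFilter, boolean cache, int cost) {
        return implementsPostFilter && !cache && cost >= POST_FILTER_MIN_COST;
    }

    /** Mirrors the getCost override in the text: never report less than the threshold. */
    static int effectiveCost(int configuredCost) {
        return Math.max(configuredCost, POST_FILTER_MIN_COST);
    }
}
```

With this rule, `runsAsPostFilter(true, false, effectiveCost(0))` is true, while enabling the cache or reporting a cost of 99 makes it false, which is why the class pins both values.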

The equals and hashCode methods are also overridden. Both are declared on the org.apache.lucene.search.Query abstract class, which RedisPostFilter extends through ExtendedQueryBase, so the post filter behaves like any other Lucene query object.

In order to compile the code, we will need to use the following JAR files in our class path to handle the dependencies:

- jedis-1.5.0.jar

- log4j-1.2.16.jar

- lucene-core-4.8.1.jar

- solr-core-4.8.1.jar

- slf4j-log4j12-1.6.6.jar

- slf4j-api-1.6.6.jar

- solr-solrj-4.8.1.jar

Once compiled, we can create a JAR file using the following command:

    $ jar -cvf redis.jar packt/*

We will see the following output on the screen:

    added manifest
    adding: packt/search/(in = 0) (out= 0)(stored 0%)
    adding: packt/search/RedisQParserPlugin$1.class(in = 1335) (out= 568)(deflated 57%)
    adding: packt/search/RedisQParserPlugin.class(in = 1179) (out= 524)(deflated 55%)
    adding: packt/search/RedisPostFilter.class(in = 2720) (out= 1497)(deflated 44%)
    adding: packt/search/RedisPostFilter$1.class(in = 2135) (out= 986)(deflated 53%)

In order to load the plugin, copy redis.jar and jedis-1.5.0.jar to the <solr_installation_dir>/example/lib folder and specify the library path in the solrconfig.xml file:

    <lib dir="../../lib/" regex="redis\.jar" />
    <lib dir="../../lib/" regex="jedis-1\.5\.0\.jar" />

We also need to register the implementation class in the solrconfig.xml file. This is the glue that hooks the Redis post-filter implementation into Solr:

    <queryParser name="redis" class="packt.search.RedisQParserPlugin" />

On starting Solr, we can see that the specified JAR files are loaded:

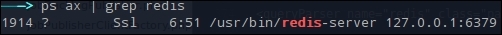

    3110 [coreLoadExecutor-4-thread-2] INFO org.apache.solr.core.SolrResourceLoader – Adding 'file:/home/jayant/installed/solr-4.7.2/example/lib/redis.jar' to classloader

Now restart the Solr server and check that Redis is running on localhost, port 6379:

Redis server working

In order to call the filter, we will have to pass fq={!redis} to our Solr query:

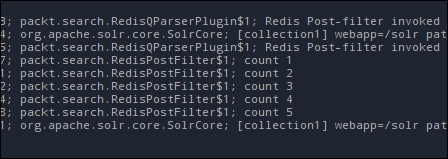

    http://localhost:8983/solr/collection1/select?q=*:*&fq={!redis}

The calls to RedisPostFilter can be seen in the Solr logs, as shown in the accompanying image.
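When the request is issued from code rather than typed into a browser, the `*:*` and `{!redis}` values generally need to be URL-encoded. The following is a minimal sketch using only the JDK; `RedisFilterUrlSketch` and `selectUrl` are hypothetical names, and the base URL is the one used in the example above.

```java
import java.io.UnsupportedEncodingException;
import java.net.URLEncoder;

/** Hypothetical helper that builds the select URL with an encoded fq parameter. */
final class RedisFilterUrlSketch {

    /** Appends the query and filter query to the core's base URL, encoding both values. */
    static String selectUrl(String baseUrl, String query, String filterQuery) {
        return baseUrl + "/select?q=" + encode(query) + "&fq=" + encode(filterQuery);
    }

    private static String encode(String value) {
        try {
            return URLEncoder.encode(value, "UTF-8");
        } catch (UnsupportedEncodingException e) {
            // UTF-8 is a charset every Java platform is required to support.
            throw new IllegalStateException(e);
        }
    }
}
```

For example, `RedisFilterUrlSketch.selectUrl("http://localhost:8983/solr/collection1", "*:*", "{!redis}")` yields `http://localhost:8983/solr/collection1/select?q=*%3A*&fq=%7B%21redis%7D`, which carries the same parameters as the URL shown above.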

This plugin can be used to filter the ads on the basis of their status. Updates regarding the status of ads can be made into the Redis database through an external script. The actual implementation inside Solr can differ depending on the logic that you want to implement in the post filter.
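The effect of such status updates can be sketched in plain Java. This is an in-memory stand-in only: the `AdStatusSketch` class and its method names are hypothetical, and the concurrent set plays the role of the Redis set of online ad IDs, with `markOnline` and `markOffline` standing where an external script would add or remove members.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;

/** Hypothetical in-memory model of the online-ads set that the text keeps in Redis. */
final class AdStatusSketch {

    // Stand-in for the Redis set of online ad IDs; safe for concurrent updates.
    private final Set<String> onlineAds = ConcurrentHashMap.newKeySet();

    /** What an external script would do when an ad goes live. */
    void markOnline(String adId) {
        onlineAds.add(adId);
    }

    /** What an external script would do when an ad is paused or expires. */
    void markOffline(String adId) {
        onlineAds.remove(adId);
    }

    /** Keeps only the matched ads that are currently online, preserving their order. */
    List<String> filter(List<String> matchedAdIds) {
        List<String> visible = new ArrayList<>();
        for (String adId : matchedAdIds) {
            if (onlineAds.contains(adId)) {
                visible.add(adId);
            }
        }
        return visible;
    }
}
```

In this model, taking an ad offline changes the outcome of the next `filter` call immediately, with no reindexing step, which is the behaviour the Redis-backed post filter aims for.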