Measuring the quality of search results

Now that we know what analyzers are and how text analysis happens, we need to know whether the analysis that we have implemented actually improves the results. Two measures determine the quality of a search result set: precision and recall.

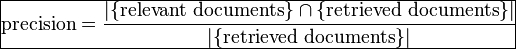

- Precision: This is the fraction of retrieved documents that are relevant. A precision of 1.0 means that every result returned by the search was relevant, but there may be other relevant documents that were not a part of the search result.

Precision equation

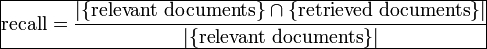

- Recall: This is the fraction of relevant documents that are retrieved. A recall of 1.0 means that all relevant documents were retrieved by the search irrespective of the irrelevant documents included in the result set.

Recall equation

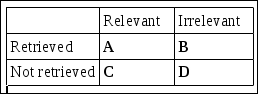

Another way to define precision and recall is by classifying the documents into four classes, according to whether each document is relevant and whether it was retrieved, as follows:

Precision and recall

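Let A be the set of relevant documents that were retrieved, B the irrelevant documents that were retrieved, and C the relevant documents that were not retrieved. The fourth class, irrelevant documents that were not retrieved, appears in neither formula. We can then define precision and recall as follows:

Precision = |A| / |A ∪ B|

Recall = |A| / |A ∪ C|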
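The two formulas above can be sketched in a few lines of Python. This is a minimal illustration, not part of any search library: the function name `precision_recall` and the document IDs are hypothetical. It assumes A is the set of documents that are both relevant and retrieved, so that A ∪ B is everything retrieved and A ∪ C is everything relevant.

```python
def precision_recall(retrieved, relevant):
    """Return (precision, recall) for two collections of document IDs."""
    retrieved, relevant = set(retrieved), set(relevant)
    a = retrieved & relevant  # A: documents that are relevant AND retrieved
    # A union B is the full retrieved set; A union C is the full relevant set.
    precision = len(a) / len(retrieved) if retrieved else 0.0
    recall = len(a) / len(relevant) if relevant else 0.0
    return precision, recall


# Hypothetical data: 4 documents retrieved, 3 of them relevant,
# out of 6 relevant documents in the whole collection.
retrieved = {"d1", "d2", "d3", "d4"}
relevant = {"d1", "d2", "d3", "d7", "d8", "d9"}

precision, recall = precision_recall(retrieved, relevant)
print(precision, recall)  # 0.75 0.5
```

Returning 0.0 for an empty retrieved or relevant set is a convention chosen here to avoid division by zero; other conventions are possible.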

We can see that as B, the number of irrelevant documents in the result set, increases, the precision goes down. If every document in the collection is retrieved, recall is perfect but precision is poor. On the other hand, if the result set contains only a single document and that document is relevant, precision is perfect, but recall is poor whenever other relevant documents exist, so again the result set is not good. This is the trade-off between precision and recall: in practice, they tend to move in opposite directions. We can increase recall by retrieving more documents, but this usually decreases the precision of the result set. A good result set has to balance precision and recall.
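To make the trade-off concrete, here is a small sketch that measures precision and recall as more documents are returned from a ranked list. The function name `precision_recall_at_k`, the ranking, and the relevance judgments are all made up for illustration.

```python
def precision_recall_at_k(ranked, relevant, k):
    """Precision and recall when only the top k ranked documents are returned."""
    top_k = ranked[:k]
    if not top_k or not relevant:
        return 0.0, 0.0
    hits = sum(1 for doc in top_k if doc in relevant)
    return hits / len(top_k), hits / len(relevant)


# Hypothetical ranking of six documents, three of which are relevant.
ranked = ["d1", "d5", "d2", "d6", "d7", "d3"]
relevant = {"d1", "d2", "d3"}

for k in (1, 3, 6):
    p, r = precision_recall_at_k(ranked, relevant, k)
    print(f"k={k}: precision={p:.2f} recall={r:.2f}")
# k=1: precision=1.00 recall=0.33
# k=3: precision=0.67 recall=0.67
# k=6: precision=0.50 recall=1.00
```

With this data, returning more documents raises recall from 0.33 to 1.00 while precision falls from 1.00 to 0.50.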

We should optimize our results for precision if the hits are plentiful and several results can meet the search criteria. When the collection of documents is huge, it makes sense to provide a few relevant, good hits rather than pad the result set with irrelevant results. An example scenario where optimizing for precision makes sense is web search, where the number of available documents is huge.

On the other hand, we should optimize for recall if we do not want to miss any relevant document. This is typically the case when the collection of documents is comparatively small. It then makes sense to return all relevant documents and not worry about the irrelevant documents added to the result set. An example scenario where optimizing for recall makes sense is patent search.

A common single measure of the quality of the result set is the F-measure, also called the F1 score, which is defined by the following formula:

F1 = 2 * ((precision * recall) / (precision + recall))

This combines precision and recall into their harmonic mean. The harmonic mean is a type of average suited to ratios; it is pulled toward the smaller of the two values, so the F1 score is high only when both precision and recall are high. Note that this is not accuracy in the classification sense, which is the fraction of all documents that were classified correctly. The F1 score can be used as a reference point when figuring out the combination of precision and recall that your result set provides.
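The harmonic-mean formula above (commonly known as the F1 score) can be sketched as follows. The function name `f1_score` and the input values are illustrative only, and returning 0.0 when both inputs are zero is an assumed convention to avoid dividing by zero.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0  # assumed convention when both values are zero
    return 2 * (precision * recall) / (precision + recall)


# Balanced values give a score close to both inputs.
print(f1_score(0.75, 0.5))  # 0.6

# A very low recall drags the score down even when precision is perfect;
# the arithmetic mean of these two values would be 0.55.
print(f"{f1_score(1.0, 0.1):.2f}")  # 0.18
```

Because the harmonic mean stays close to the smaller input, a result set cannot score well by maximizing only one of the two measures.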

Let us look at some practical problems faced while searching in different business scenarios.