Input/output bottlenecks, or I/O bottlenecks for short, are bottlenecks where your computer spends more time waiting on various inputs and outputs than it does processing the information.

You'll typically find this type of bottleneck when you are working with an I/O-heavy application. A standard web browser is a good example: it typically spends significantly longer waiting for network requests for style sheets, scripts, and HTML pages to finish than it does rendering them on the screen.

If the rate at which data arrives is slower than the rate at which it can be consumed, then you have an I/O bottleneck.

There are two main ways to improve the speed of these applications: improve the speed of the underlying I/O by buying faster, more expensive hardware, or improve the way in which we handle the I/O requests.
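As a rough sketch of the second option, the snippet below overlaps several slow requests using a thread pool instead of issuing them one after another. The function `fake_request` and its 0.05-second delay are assumptions standing in for a real network call, and the helper names are hypothetical.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_request(page_id):
    """Hypothetical stand-in for a network request: waits, then returns."""
    time.sleep(0.05)  # assumed latency; a real request would block on the network
    return "page-{}".format(page_id)


def fetch_sequentially(page_ids):
    """Issue the requests one after another, waiting for each to finish."""
    return [fake_request(page_id) for page_id in page_ids]


def fetch_concurrently(page_ids, workers=8):
    """Issue the requests from a pool of threads so the waits overlap."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() yields results in the same order as the inputs
        return list(pool.map(fake_request, page_ids))


def timed(func, *args):
    """Return (result, elapsed_seconds) for a single call to func."""
    start = time.perf_counter()
    result = func(*args)
    return result, time.perf_counter() - start


if __name__ == "__main__":
    ids = list(range(8))
    _, sequential = timed(fetch_sequentially, ids)
    _, concurrent = timed(fetch_concurrently, ids)
    print("Sequential: {:.2f} Seconds".format(sequential))
    print("Concurrent: {:.2f} Seconds".format(concurrent))
```

Because each simulated request spends its time waiting rather than computing, the threaded version should finish in a fraction of the sequential time. The exact figures will vary from machine to machine.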

A great example of a program bound by I/O bottlenecks is a web crawler. The main purpose of a web crawler is to traverse the web and index web pages so that a search engine such as Google can take them into consideration when its ranking algorithm decides the top 10 results for a given keyword.
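To make the traversal idea concrete, here is a minimal sketch of a breadth-first crawl over a tiny in-memory link graph. The `LINKS` dictionary and the `crawl` function are hypothetical. A real crawler would fetch each page over the network instead of looking it up in a dictionary, and that fetch is where the waiting happens.

```python
from collections import deque

# Hypothetical link graph: each page maps to the pages it links to.
LINKS = {
    "/": ["/about", "/blog"],
    "/about": ["/"],
    "/blog": ["/blog/post-1", "/about"],
    "/blog/post-1": [],
}


def crawl(start, links):
    """Visit every page reachable from start, breadth-first, exactly once."""
    visited = []
    seen = {start}
    frontier = deque([start])
    while frontier:
        page = frontier.popleft()
        visited.append(page)  # a real crawler would fetch and index the page here
        for target in links.get(page, []):
            if target not in seen:
                seen.add(target)
                frontier.append(target)
    return visited


if __name__ == "__main__":
    for page in crawl("/", LINKS):
        print(page)
```

The `seen` set keeps the crawler from revisiting a page when two pages link to each other, as `/` and `/about` do here.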

We'll start by creating a very simple script that requests a web page and times how long the request takes, as follows:

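    import urllib.request
    import time

    # Record the time just before the request is sent
    t0 = time.time()
    req = urllib.request.urlopen('http://www.example.com')
    pageHtml = req.read()
    # Record the time once the full response body has been read
    t1 = time.time()
    print("Total Time To Fetch Page: {} Seconds".format(t1-t0))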

If we break down this code, first we import the two necessary modules, urllib.request and the time module. We then record the starting time and request the web page, example.com, and then record the ending time and print out the time difference.

Now, say we wanted to add a bit of complexity and follow any links to other pages so that we could index them in the future. We could use a library such as BeautifulSoup in order to make our lives a little easier, as follows:

    import urllib.request
    import time
    from bs4 import BeautifulSoup

    t0 = time.time()
    req = urllib.request.urlopen('http://www.example.com')
    t1 = time.time()
    print("Total Time To Fetch Page: {} Seconds".format(t1-t0))

    # Parse the response body and print the target of every link on the page
    soup = BeautifulSoup(req.read(), "html.parser")
    for link in soup.find_all('a'):
        print(link.get('href'))

    t2 = time.time()
    print("Total Execution Time: {} Seconds".format(t2-t0))

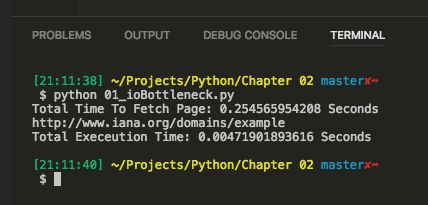

When I execute the preceding program, I see the results like so on my terminal:

You'll notice from this output that the time to fetch the page is over a quarter of a second. Now imagine that we wanted to run our web crawler over a million different web pages. Fetched one after another, our total execution time would be roughly a million times longer.
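A quick back-of-the-envelope calculation shows the scale involved. The sketch below assumes a flat 0.25 seconds per page, taken from the quarter-of-a-second figure mentioned here, and assumes the pages are fetched strictly one after another. The helper `estimate_sequential_seconds` is hypothetical.

```python
def estimate_sequential_seconds(seconds_per_page, page_count):
    """Total time if every page is fetched one after another."""
    return seconds_per_page * page_count


if __name__ == "__main__":
    # Assumed inputs: 0.25 seconds per page, one million pages
    total = estimate_sequential_seconds(0.25, 1000000)
    print("Total: {:.0f} Seconds".format(total))
    print("Which is about {:.1f} hours".format(total / 3600))
```

Under these assumptions the total comes to 250,000 seconds, which is a little over 69 hours of almost pure waiting.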

The main cause of this enormous execution time is the I/O bottleneck we face in our program. We spend a massive amount of time waiting on our network requests, and only a fraction of that time parsing the retrieved page for further links to crawl.
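One way to check this claim is to time the waiting and the parsing separately. The sketch below is a stand-in built on several assumptions and is not the crawler shown earlier: `simulated_fetch` sleeps for 0.25 seconds in place of a real request, `SAMPLE_HTML` is made-up markup, and the standard library's `html.parser` is swapped in for BeautifulSoup so that the example has no third-party dependency.

```python
import time
from html.parser import HTMLParser

# Made-up page content used in place of a real response body.
SAMPLE_HTML = '<html><body><a href="/a">A</a><p>text</p><a href="/b">B</a></body></html>'


class LinkCollector(HTMLParser):
    """Collects the href attribute of every <a> tag it sees."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href":
                    self.links.append(value)


def simulated_fetch():
    """Hypothetical fetch: waits 0.25 s to mimic network latency."""
    time.sleep(0.25)
    return SAMPLE_HTML


def fetch_and_parse():
    """Return (links, fetch_seconds, parse_seconds)."""
    t0 = time.perf_counter()
    html = simulated_fetch()
    t1 = time.perf_counter()
    collector = LinkCollector()
    collector.feed(html)
    t2 = time.perf_counter()
    return collector.links, t1 - t0, t2 - t1


if __name__ == "__main__":
    links, fetch_seconds, parse_seconds = fetch_and_parse()
    print("Links found: {}".format(links))
    print("Time Waiting On Fetch: {:.4f} Seconds".format(fetch_seconds))
    print("Time Parsing: {:.4f} Seconds".format(parse_seconds))
```

With a real request the fetch time would vary with the network, but the pattern of a long wait followed by a short burst of parsing is what marks a program as I/O bound.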